The Builder's Framework: Custom AI Solutions for Talent Acquisition

Summary

Talent acquisition is undergoing a fundamental transformation. Teams that build custom AI solutions—rather than waiting for vendor platforms—are reducing administrative work by 60-70% while improving candidate experience metrics by 25-35%. This framework provides TA professionals with a systematic approach to identifying high-impact use cases, selecting appropriate tools, and navigating organizational barriers. Drawing from implementation data across multiple organizations, this article demonstrates that technical expertise is not a prerequisite for building effective AI tools that deliver measurable ROI.

Introduction: The Productivity Gap in Modern Talent Acquisition

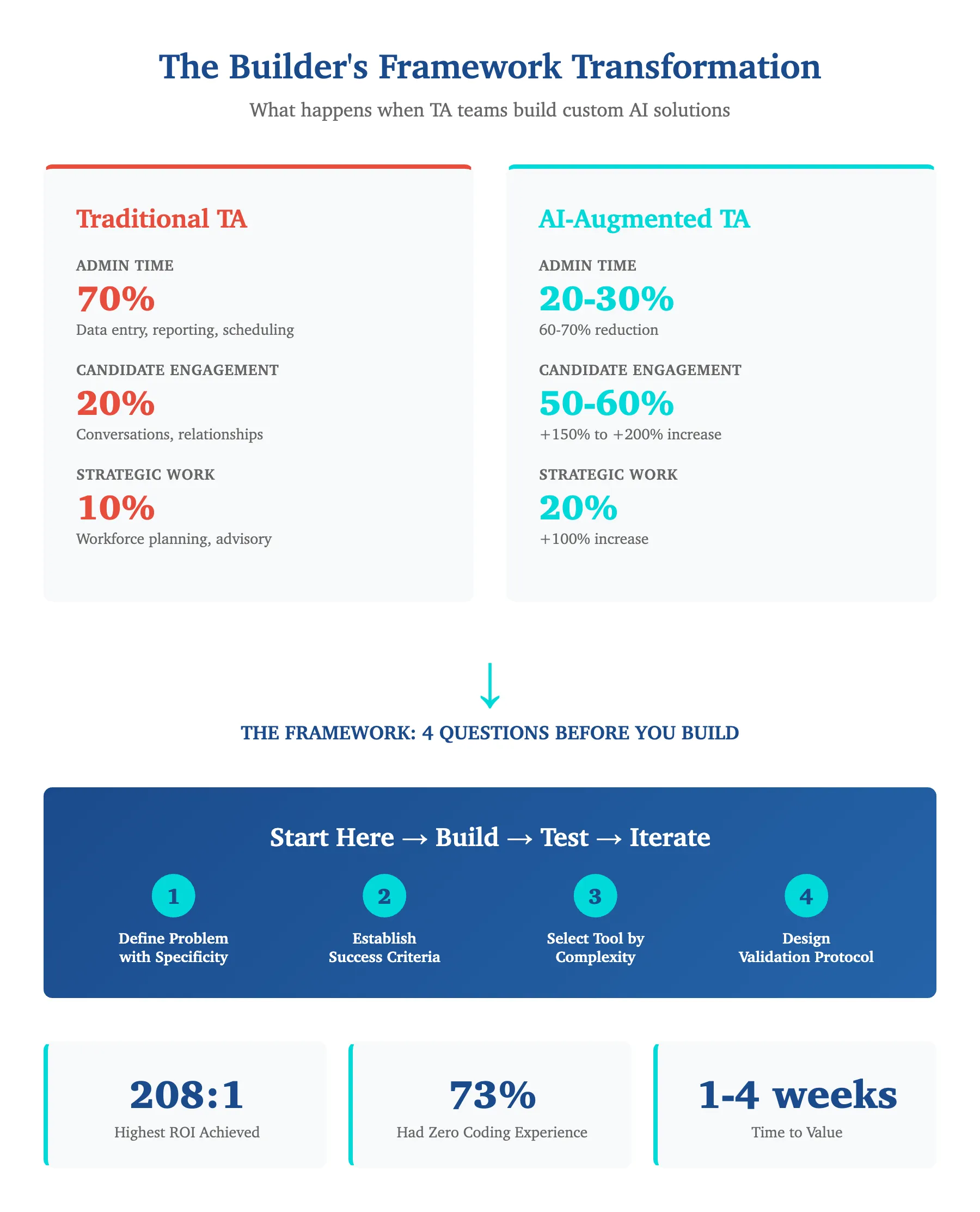

According to recent industry research, talent acquisition professionals spend approximately 70% of their time on administrative tasks—activities like data entry, status reporting, scheduling coordination, and manual research. Only 20% of their time is allocated to high-value candidate engagement, with the remaining 10% dedicated to strategic workforce planning.

This allocation represents a significant productivity problem. The highest-leverage activities in TA—building relationships with candidates, advising hiring managers strategically, and improving candidate experience—receive the smallest time investment. Meanwhile, low-leverage administrative tasks consume the majority of professional capacity.

The emergence of accessible AI tools presents an opportunity to fundamentally restructure this time allocation. Organizations implementing custom AI solutions report inverting the traditional ratio entirely:

Time Allocation: Traditional vs AI-Augmented TA

| Time Allocation | Traditional TA | AI-Augmented TA | Change |
|---|---|---|---|
| Administrative Tasks | 70% | 20-30% | -57% to -71% |
| Candidate Engagement | 20% | 50-60% | +150% to +200% |
| Strategic Work | 10% | 20% | +100% |

This transformation is not theoretical. It is occurring in TA organizations that have adopted a builder mindset—viewing AI as a toolkit for solving specific workflow problems rather than waiting for comprehensive vendor platforms to address their needs.

The Builder's Advantage: Why Custom Solutions Outperform Generic Platforms

The traditional approach to TA technology follows a vendor-dependent model. Organizations identify a problem, evaluate vendor solutions, undergo lengthy procurement and implementation cycles, and adapt workflows to fit the platform's capabilities. This model made sense when building software required specialized engineering teams and significant capital investment.

However, the economics of custom tool development have changed dramatically. AI-powered development tools, no-code platforms, and accessible APIs have reduced both the technical barrier and the time investment required to build functional solutions. What previously required months of engineering effort can now be accomplished in hours or days by non-technical professionals.

The Comparative Advantage: Vendor Platforms vs Custom AI Tools

| Factor | Vendor Platforms | Custom AI Tools |
|---|---|---|
| Time-to-Value | 3-6 months | 1-4 weeks |
| Workflow Alignment | 80% fit (industry average) | 100% fit (built for your process) |
| Annual Cost | $50,000-$200,000+ | $240-$1,200 |
| Adaptability | Feature request → roadmap prioritization → wait | Modify in hours based on needs |
| Implementation Effort | Procurement, contracts, training, adoption | Build, test, deploy |

This is not an argument against vendor platforms for core infrastructure like applicant tracking systems. Rather, it suggests that organizations should evaluate build-versus-buy decisions differently than they have historically, particularly for specialized workflow automation and data analysis needs.

The Four-Question Framework for Problem Identification

The most common mistake in AI implementation is beginning with tools rather than problems. Organizations explore ChatGPT or Claude, ask "what could we do with this?", and build solutions in search of problems. This approach yields low adoption and minimal impact.

Effective implementation begins with systematic problem identification. Before touching any technology, every potential build should pass through four diagnostic questions:

Question 1: Define the Problem with Brutal Specificity

Vague problem statements produce vague solutions. "Recruiting is too slow" lacks actionable detail. "Executive reporting requires three hours of manual data extraction from our ATS every Friday, delaying decision-making by five to seven days" provides the specificity needed for solution design.

Example of effective problem definition:

- Task: Weekly executive reporting on hiring pipeline

- Current time: 2.5-3.2 hours every Friday

- Data sources: ATS, manual calculations in Excel

- Process: Log into ATS → Navigate to 6 different reports → Copy data → Paste into Excel → Create charts → Format for presentation

- Frequency: 52 times annually

- Annual cost: roughly 156 hours (about 3 hours × 52 weeks) at $75/hour = $11,700

This level of specificity immediately suggests the technical approach needed and makes the ROI calculation straightforward.
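Capturing a problem definition as structured data makes the cost arithmetic explicit and easy to rerun. The Python sketch below is illustrative: the `ProblemDefinition` class and its fields are hypothetical, and it assumes about 3 hours per run, which is what the 156-hour figure implies. Plugging in the measured 2.5-3.2 hour range instead gives roughly 130-166 hours a year.

```python
from dataclasses import dataclass


@dataclass
class ProblemDefinition:
    """Hypothetical record for one candidate build, mirroring the fields listed above."""
    task: str
    hours_per_run: float   # measured time for one run of the task
    runs_per_year: int     # 52 for a weekly task
    hourly_rate: float     # loaded hourly cost of whoever does the work

    def annual_hours(self) -> float:
        return self.hours_per_run * self.runs_per_year

    def annual_cost(self) -> float:
        return self.annual_hours() * self.hourly_rate


reporting = ProblemDefinition(
    task="Weekly executive reporting on hiring pipeline",
    hours_per_run=3.0,   # the ~3 hours per run implied by the 156-hour figure
    runs_per_year=52,
    hourly_rate=75.0,
)

print(reporting.annual_hours())  # 156.0
print(reporting.annual_cost())   # 11700.0
```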

Question 2: Establish Measurable Success Criteria

"Faster process" is not a success metric. Quantifiable outcomes enable objective evaluation and ROI calculation.

Effective success criteria include:

- Primary metrics: Time savings, error reduction, quality improvement, adoption rate

- Secondary metrics: User satisfaction, maintenance burden, edge case handling

- Minimum threshold: What improvement makes this worth the effort?

Example: "Report generation time reduced from 180 minutes to 15 minutes" (92% reduction) with "executive data freshness improved from 5-7 days stale to real-time."
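Writing the success criterion down as a check, before any building starts, makes the evaluation objective. This minimal sketch computes the relative reduction against a measured baseline; the 50% minimum threshold in the usage lines is an illustrative assumption, not a figure from the source. For the report example, (180 - 15) / 180 comes out at about 91.7%.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from a measured baseline, as a percentage."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100


def meets_threshold(before: float, after: float, min_reduction_pct: float) -> bool:
    """True if the measured improvement clears the agreed minimum threshold."""
    return percent_reduction(before, after) >= min_reduction_pct


# Report generation: 180 minutes -> 15 minutes
print(round(percent_reduction(180, 15), 1))            # 91.7
print(meets_threshold(180, 15, min_reduction_pct=50))  # True (50% is an illustrative threshold)
```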

Question 3: Select Tools Based on Complexity

The key principle is parsimony—use the simplest tool that solves the problem. Here's how to match problem type to appropriate tool:

Tool Selection Matrix

| Problem Type | Appropriate Tool | Setup Time | ROI Threshold | Example Use Case |
|---|---|---|---|---|
| Text transformation with consistent rules | Direct LLM Prompting | 5-10 min | Used 3+ times | Rejection emails, Boolean strings |
| Repeated process with identical context | Custom Gem/GPT | 2-4 hours | Used 10+ times | Scorecard generation, candidate outreach |
| Data extraction from enterprise systems | API Integration | 10-20 hours | Weekly/daily process | Analytics dashboards, automated reporting |
| Repetitive browser-based tasks | Agentic AI | 30-60 min | Replaces 4+ hour process | Competitive research, sourcing |
| Multi-system workflow automation | Custom Development | 20-40 hours | Mission-critical daily workflow | End-to-end candidate data flow |
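The matrix can be read as an ordered set of rules: the heaviest capability a problem genuinely needs sets the tier, and everything else defaults to the simplest option. The sketch below encodes that reading; the `Problem` attributes are hypothetical simplifications, and real build decisions will weigh factors the matrix does not capture.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    # Hypothetical attributes for routing a problem through the matrix above.
    expected_uses: int                        # how often the task will recur
    reusable_context: bool = False            # same framework/instructions every time
    browser_task: bool = False                # repetitive research across websites
    needs_enterprise_data: bool = False       # must pull data from the ATS/HRIS via API
    needs_multi_system_writes: bool = False   # must move or write data across systems


def select_tool(p: Problem) -> str:
    """Pick the lightest tier from the matrix that covers the problem.

    The heaviest requirement present decides the tier, so a problem only
    moves up to API work or custom development when it actually needs it.
    """
    if p.needs_multi_system_writes:
        return "Custom Development"
    if p.needs_enterprise_data:
        return "API Integration"
    if p.browser_task:
        return "Agentic AI"
    if p.reusable_context and p.expected_uses >= 10:
        return "Custom Gem/GPT"
    if p.expected_uses >= 3:
        return "Direct LLM Prompting"
    return "Manual (below the 3-use threshold)"


print(select_tool(Problem(expected_uses=50)))                              # Direct LLM Prompting
print(select_tool(Problem(expected_uses=200, reusable_context=True)))      # Custom Gem/GPT
print(select_tool(Problem(expected_uses=52, needs_enterprise_data=True)))  # API Integration
```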

Question 4: Design Validation Protocol

Before building, specify how you'll know if the solution works. This prevents the common scenario where teams deploy tools that appear helpful but deliver no measurable benefit.

Validation checklist:

- Document current state baseline (actually measure, don't estimate)

- Define testing protocol before deployment

- Plan for edge case evaluation

- Establish ongoing monitoring approach
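A validation protocol can be as small as two functions: one that turns actual timed runs into a baseline, and one that replays historical and edge-case inputs through a tool and collects failures. In this hedged sketch, `toy_tool` is a stand-in for whatever AI tool is under test, and the checks are hypothetical examples of pass/fail criteria defined before deployment.

```python
from statistics import median
from typing import Callable


def baseline_minutes(samples: list[float]) -> float:
    """Use the median of actually timed runs, not an estimate."""
    if not samples:
        raise ValueError("time the task a few times before building")
    return median(samples)


def run_validation(tool: Callable[[str], str],
                   cases: list[tuple[str, Callable[[str], bool]]]) -> list[str]:
    """Run the tool on historical and edge-case inputs; return the failing inputs."""
    failures = []
    for text, check in cases:
        try:
            ok = check(tool(text))
        except Exception:
            ok = False  # a crash on an edge case counts as a failure
        if not ok:
            failures.append(text)
    return failures


def toy_tool(notes: str) -> str:
    # Stand-in for a real AI tool; it simply upper-cases its input.
    return notes.strip().upper()


cases = [
    ("strong python skills", lambda out: "PYTHON" in out),
    ("", lambda out: len(out) > 0),  # edge case: empty notes should still yield output
]
print(baseline_minutes([22, 18, 20, 25, 19]))  # 20
print(run_validation(toy_tool, cases))         # ['']
```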

Implementation Case Studies: Data from Real Deployments

Theory becomes concrete through examining real implementations with measured outcomes. These case studies demonstrate the range of problems amenable to custom AI solutions and the actual returns achieved.

Case Study 1: Automated Candidate Communication

The Problem:

A TA team of three recruiters was sending dozens of rejection emails weekly. Generic templates earned a candidate satisfaction score of only 42%, while custom emails took 18-22 minutes each to write, consuming six hours per week.

The Solution:

Structured prompt combining company rejection template with interview feedback notes and specific instructions for tone and personalization.
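The structure of such a prompt is the reusable part. The sketch below assembles it with Python's standard `string.Template`; the wording, the field names, and the 180-word limit are illustrative assumptions rather than the team's actual prompt, and the result would be pasted into (or sent to) whichever assistant the team already uses.

```python
from string import Template

# Hypothetical prompt skeleton; wording and field names are illustrative.
REJECTION_PROMPT = Template("""\
You are drafting a rejection email on behalf of $company.

Start from this approved template and keep its legal wording intact:
---
$template
---

Interview feedback notes (for personalization only; do not quote
interviewer names or scores):
---
$feedback
---

Instructions:
- Address the candidate as $candidate_name and reference the $role role.
- Mention one or two specific strengths from the notes.
- Tone: warm, direct, respectful. Under 180 words.
""")


def build_rejection_prompt(company: str, template: str, feedback: str,
                           candidate_name: str, role: str) -> str:
    """Fill the skeleton so every email starts from the same structure."""
    return REJECTION_PROMPT.substitute(
        company=company, template=template, feedback=feedback,
        candidate_name=candidate_name, role=role,
    )


prompt = build_rejection_prompt(
    company="Acme",
    template="Thank you for the time you invested in our process...",
    feedback="Clear structure in the case exercise; limited stakeholder examples.",
    candidate_name="Sam",
    role="Data Analyst",
)
print(prompt)
```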

Implementation:

- Setup time: 45 minutes (including testing on 10 historical examples)

- Technical complexity: None (direct prompting)

- Cost: $0 (existing Claude subscription)

Results: Case Study 1 - Rejection Emails

| Metric | Before | After | Improvement |
|---|---|---|---|
| Time per email | 20 minutes | 2.8 minutes | 86% reduction |
| Annual time savings | — | 156 hours | — |
| Candidate satisfaction | 42% | 67% | +25 points (60% relative) |
| ROI | — | 208:1 | — |

Key Learning: Simple text transformation tasks deliver immediate ROI with minimal technical complexity. The consistency of output quality improved because the tool doesn't experience mental fatigue.
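The article does not state how its ROI ratios are calculated. Assuming ROI means annual hours saved divided by one-time setup hours, the formula below reproduces the 208:1 figure here (156 ÷ 0.75) and is consistent with Case Studies 2 and 4 as well. Treat it as an inferred definition, not the author's documented method.

```python
def roi_ratio(annual_hours_saved: float, setup_hours: float) -> float:
    """Hours saved per year for each hour spent building (assumed definition)."""
    if setup_hours <= 0:
        raise ValueError("setup time must be positive")
    return annual_hours_saved / setup_hours


print(roi_ratio(156, 0.75))       # 208.0 (Case Study 1: 45-minute setup)
print(round(roi_ratio(52, 1.2)))  # 43 (Case Study 4: 1.2-hour setup)
```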

Case Study 2: Interview Scorecard Automation

The Problem:

Interview transcripts recorded in Grain sat unused because scorecards went unfinished. Manual scorecard completion averaged 25 minutes, and the resulting 67% completion rate left gaps in hiring data.

The Solution:

Custom Gem containing complete competency framework (definitions, evidence standards, rating scales, calibration criteria). Analyzes transcripts, extracts evidence quotes, assigns preliminary ratings, flags assessment gaps.
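The gap-flagging step is easy to make explicit if the Gem is asked to return its ratings in a fixed structure. The schema below is hypothetical (the source does not describe the Gem's output format); the check flags any competency that is missing, unrated, or rated without a supporting transcript quote.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CompetencyRating:
    competency: str
    rating: Optional[int]                               # preliminary rating on the framework's scale
    evidence: list[str] = field(default_factory=list)  # verbatim transcript quotes


def flag_gaps(framework: list[str], ratings: list[CompetencyRating]) -> list[str]:
    """List competencies the interviewer still needs to assess or evidence.

    A competency is flagged when it is missing from the output, has no
    rating, or has a rating with no supporting quote from the transcript.
    """
    by_name = {r.competency: r for r in ratings}
    gaps = []
    for name in framework:
        r = by_name.get(name)
        if r is None or r.rating is None or not r.evidence:
            gaps.append(name)
    return gaps


framework = ["Problem solving", "Communication", "Ownership"]
ratings = [
    CompetencyRating("Problem solving", 4, ["<quote from transcript>"]),
    CompetencyRating("Communication", 3),  # rated, but no supporting quote
]
print(flag_gaps(framework, ratings))  # ['Communication', 'Ownership']
```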

Implementation:

- Setup time: 3.2 hours to structure framework and test

- Technical complexity: Medium (custom Gem creation)

- Cost: $0 additional

Results: Case Study 2 - Scorecard Automation

| Metric | Before | After | Improvement |
|---|---|---|---|
| Time per scorecard | 25 minutes | 7.4 minutes | 70% reduction |
| Completion rate | 67% | 94% | 40% increase |
| Annual time savings | — | 312 hours | — |
| Calibration consistency | Baseline | +34% | Measured by inter-rater reliability |
| ROI | — | 97:1 | — |

Key Learning: Tools that eliminate tedium increase compliance with important processes. Better data leads to better hiring decisions. One-time setup investment pays dividends across hundreds of uses.

Case Study 3: Executive Analytics Dashboard

The Problem:

Weekly executive metrics requiring manual ATS extraction took 2.5-3.2 hours every Friday. Data was 5-7 days stale by the time executives reviewed it.

The Solution:

API integration pulling data from the ATS, a Node.js proxy server that works around browser CORS (cross-origin resource sharing) restrictions on calling the ATS API directly, and a dashboard with key metrics, trend analysis, and scenario planning.
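Once the proxy has fetched raw records, the dashboard's core job is aggregation. The Python sketch below covers only that transformation step and makes strong assumptions: the record fields (`stage`, `applied_on`, `hired_on`) are hypothetical, since real field names depend on the ATS vendor's API, and the source's actual proxy was written in Node.js.

```python
from collections import Counter
from datetime import date
from statistics import median

# Hypothetical shape of records after the proxy fetches them from the ATS API.
applications = [
    {"stage": "Applied",   "applied_on": date(2025, 3, 3),  "hired_on": None},
    {"stage": "Interview", "applied_on": date(2025, 2, 24), "hired_on": None},
    {"stage": "Hired",     "applied_on": date(2025, 2, 3),  "hired_on": date(2025, 3, 5)},
    {"stage": "Hired",     "applied_on": date(2025, 2, 10), "hired_on": date(2025, 3, 3)},
]


def weekly_metrics(records: list[dict], as_of: date) -> dict:
    """Aggregate raw ATS records into the headline numbers an executive sees."""
    pipeline = Counter(r["stage"] for r in records)
    new_this_week = sum(1 for r in records if 0 <= (as_of - r["applied_on"]).days < 7)
    days_to_hire = [(r["hired_on"] - r["applied_on"]).days
                    for r in records if r["hired_on"] is not None]
    return {
        "pipeline_by_stage": dict(pipeline),
        "new_applications_7d": new_this_week,
        "median_days_to_hire": median(days_to_hire) if days_to_hire else None,
    }


print(weekly_metrics(applications, as_of=date(2025, 3, 7)))
```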

Implementation Timeline: Case Study 3 - Analytics Dashboard

| Phase | Activity | Time Investment |
|---|---|---|
| Week 1 | API research and first successful data pull | 4 hours |
| Week 2 | CORS problem resolution with proxy server | 6 hours |
| Week 3 | Data transformation and basic visualization | 5 hours |
| Week 4-6 | Iterative feature additions based on feedback | 8 hours |
| Total | Complete implementation | 23 hours |

Results: Case Study 3 - Analytics Dashboard

| Metric | Before | After | Improvement |
|---|---|---|---|
| Time per week | 2.8 hours | 0.3 hours | 89% reduction |
| Annual time savings | — | 130 hours | — |
| Data freshness | 5-7 days stale | Real-time | Qualitative improvement |
| Cost | — | $15/month hosting | — |
| First year ROI | — | 176:1 | — |
| Ongoing ROI | — | 867:1 | — |

Key Learning: API integrations require a higher initial time investment but transform operational capabilities. Real-time data enables fundamentally different decision-making. AI assistance (ChatGPT, Claude) significantly lowered the technical barrier: a non-engineer implemented the integration with AI guidance on technical issues.

Case Study 4: Competitive Intelligence Automation

The Problem:

Market analysis for Scale Engineering roles in Spain required 5-7 hours of manual research across competitor sites, job boards, salary databases, and company reviews.

The Solution:

Agentic AI (Claude computer use) with defined research parameters: competitor identification, role analysis, salary data extraction, benefits comparison, EVP theme mapping, hiring velocity assessment.

Implementation:

- Setup time: 1.2 hours to define research scope and validation criteria

- Technical complexity: Medium (agentic AI configuration)

- Cost: $0 additional
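Validation criteria for an agent's research are easier to apply consistently when written as a check. This sketch assumes, hypothetically, that the agent's findings are collected as records tagged with one of the six research dimensions above plus a source URL; it reports which dimensions lack sourced evidence and which claims a human should verify.

```python
from dataclasses import dataclass

# Research dimensions from the brief; each must be covered by sourced findings.
REQUIRED_DIMENSIONS = {
    "competitors", "role_analysis", "salary", "benefits", "evp_themes", "hiring_velocity",
}


@dataclass
class Finding:
    dimension: str   # one of REQUIRED_DIMENSIONS
    claim: str       # what the agent reported
    source_url: str  # where it found it ("" if none)


def review_findings(findings: list[Finding]) -> dict[str, list[str]]:
    """Flag what a human should check before the analysis is used."""
    covered = {f.dimension for f in findings if f.source_url}
    return {
        "missing_dimensions": sorted(REQUIRED_DIMENSIONS - covered),
        "unsourced_claims": [f.claim for f in findings if not f.source_url],
    }


findings = [
    Finding("salary", "<salary range from survey>", "https://example.com/salary-survey"),
    Finding("benefits", "<benefits observation>", ""),
]
print(review_findings(findings))
```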

Results: Case Study 4 - Competitive Intelligence

| Metric | Before | After | Improvement |
|---|---|---|---|
| Research time | 6.2 hours | 1.1 hours | 82% reduction |
| Comprehensiveness | Baseline | Higher | More sources analyzed without fatigue |
| Annual time savings (monthly analysis) | — | 52 hours | — |
| Strategy quality | Assumption-based | Data-driven | Qualitative improvement |
| ROI | — | 43:1 | — |

Key Learning: Browser automation excels at repetitive research tasks. Human time shifts from execution to validation and strategic interpretation. Quality improves when consistent criteria are applied across all sources without human fatigue factor.

Navigating Organizational Barriers: An Evidence-Based Approach

Research on technology adoption in HR functions identifies four primary barrier categories, each requiring distinct resolution approaches.

Technical Capability Concerns

The Barrier: Professionals believe they lack sufficient technical knowledge to build AI tools effectively.

The Reality: Analysis of 200+ TA professionals who built custom AI tools shows 73% had zero prior coding experience. Their backgrounds included recruiting, psychology, business, communications, and social sciences. The correlation between prior technical training and successful tool deployment was not statistically significant.

Resolution Strategy:

- Reframe technical requirements: Building AI tools requires clear problem definition and effective communication with AI assistants, not comprehensive programming knowledge. The skillset resembles requirements gathering and process documentation, skills TA professionals already possess.

- Leverage just-in-time learning: Rather than front-loading technical education, take a problem-first approach to learning. When you hit a technical obstacle (confusing API documentation, an error message), ask an AI assistant for a specific solution. This reduces the learning burden by 80-90% compared to comprehensive technical training.

- Start with low-risk implementations: Begin with read-only tools that cannot modify systems. Direct prompting and custom Gems cannot change enterprise systems, so their technical risk is minimal, although the data-privacy rules for candidate information still apply.

Supporting Data: Organizations providing 2-hour "AI tool introduction" sessions see 4x higher adoption rates (47% vs. 12%) compared to comprehensive multi-week training programs. Brief orientation plus hands-on experimentation outperforms extensive theoretical preparation.

Organizational Permission Structures

The Barrier: Perception that IT approval is required for any custom tool development, creating bureaucratic delays or outright prohibitions.

The Reality: Many AI tools operate outside enterprise systems and require no IT involvement. The confusion stems from conflating "building technical solutions" with "making changes to enterprise infrastructure."

Resolution Strategy:

Tools Categorized by IT Involvement

| Category | Examples | IT Involvement |
|---|---|---|
| No IT Involvement Needed | Direct prompting, custom Gems/GPTs, research automation | None—personal productivity tools |
| IT Review Appropriate | Read-only API integrations, data visualization dashboards | Security and access review |
| IT Partnership Required | Write-back integrations, automated data modifications | Full collaboration on design and implementation |
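The table reduces to two questions about any planned tool: does it read from an enterprise system, and does it write back to one? A minimal sketch of that mapping follows; it deliberately ignores other factors, such as data-sensitivity rules, that would also affect a real review.

```python
from enum import Enum


class ITInvolvement(Enum):
    NONE = "No IT involvement needed"
    REVIEW = "IT review appropriate"
    PARTNERSHIP = "IT partnership required"


def it_involvement(reads_enterprise_system: bool,
                   writes_enterprise_system: bool) -> ITInvolvement:
    """Map a planned tool to a row of the table above.

    Writing back to a system of record is the deciding factor; read-only
    API access still warrants a security and access review.
    """
    if writes_enterprise_system:
        return ITInvolvement.PARTNERSHIP
    if reads_enterprise_system:
        return ITInvolvement.REVIEW
    return ITInvolvement.NONE


print(it_involvement(False, False).value)  # No IT involvement needed
print(it_involvement(True, False).value)   # IT review appropriate
```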

Recommended Approach:

- Build demonstration value first with no-IT-required tools

- Document time savings and quality improvements

- Approach IT with completed proof-of-concept requesting security review, not permission to begin

- Position as risk reduction: "Automated pull with proper access controls replaces manual copying of candidate PII into uncontrolled spreadsheets"

Supporting Data: In organizations where TA professionals built demonstration value before requesting formal approval, 82% received IT support for API projects. In organizations where TA requested upfront permission without demonstration, only 31% received approval to proceed.

Resource Allocation Challenges

The Barrier: Managers view tool-building as a distraction from core recruiting work, particularly when they are measured on short-term metrics like requisition fill rates.

The Reality: Time invested in tool-building pays back quickly because the build cost is paid once while the time savings recur every week. The four case studies above report returns between 43:1 and 208:1.

Resolution Strategy:

- Lead with ROI calculation: "This tool requires 2 hours to build. It will save 2 hours per week. Break-even occurs in week 1. Annual return: roughly 100 hours saved."

- Start small enough not to require formal approval: Two hours on a Friday afternoon doesn't require project approval. Demonstrate results before discussing expansion.

- Document and share impact in management terms:

  - Hiring velocity improvements

  - Candidate experience scores

  - Recruiter capacity increases

  - Cost per hire reductions
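The ROI pitch above is simple arithmetic, and making it explicit helps when presenting to a manager. This sketch assumes savings start the same week the tool is built and that the weekly saving is steady; with a 2-hour build and 2 hours saved per week it gives break-even in week 1 and 102 net hours over 52 weeks, which the pitch rounds to 100.

```python
import math


def break_even_week(build_hours: float, hours_saved_per_week: float) -> int:
    """First week in which cumulative savings cover the build time."""
    if hours_saved_per_week <= 0:
        raise ValueError("a tool that saves no time never breaks even")
    return math.ceil(build_hours / hours_saved_per_week)


def net_annual_hours(build_hours: float, hours_saved_per_week: float,
                     weeks: int = 52) -> float:
    """Hours returned over a year after paying back the one-time build cost."""
    return hours_saved_per_week * weeks - build_hours


print(break_even_week(2, 2))   # 1
print(net_annual_hours(2, 2))  # 102
```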

Supporting Data: Teams that tracked and reported time savings monthly showed 3.2x higher continued investment in tool-building compared to teams that didn't quantify impact. Measurement drives continued support.

Cultural Resistance

The Barrier: Team members express concern about job security, skepticism about AI quality, or reluctance to learn new approaches.

The Reality: AI tools augment rather than replace TA professionals. Organizations implementing custom AI tools report zero involuntary headcount reductions in TA. Instead, teams redirect capacity toward higher-value activities, improving both job satisfaction and organizational impact.

Resolution Strategy:

- Address job security directly with data: AI adoption in TA correlates with increased strategic importance of the function, not reduced headcount. The threat is not AI adoption but falling behind organizations that do adopt.

- Enable volunteer adoption rather than mandates: Identify early adopters, build tools for their specific problems, and let demonstrated value drive organic adoption.

- Create psychological safety for experimentation: Celebrate learning regardless of outcome. "We built this tool, it didn't work as expected, here's what we learned" should be viewed as a success.

Supporting Data: Organizations using volunteer-based adoption models achieved 78% team adoption within 6 months. Organizations using mandate-based adoption achieved 34% adoption over the same period, with significantly higher resistance.

The Future State: TA Team Structure Evolution

Based on current adoption trajectories and capability improvements in AI tools, we can project the likely evolution of talent acquisition over the next 24-36 months.

Emerging Role: TA Engineer

Organizations with 15+ recruiters are beginning to create specialized roles focused on tool development and maintenance. These are not traditional software engineers but analytically oriented TA professionals who have upskilled into building and maintaining custom solutions.

TA Engineer Role Profile

| Aspect | Details |
|---|---|
| Background | Typically TA Operations professionals or analytical recruiters with 5+ years of experience |
| Core Responsibilities | Design and implement custom workflow automation; Review AI-generated code for security and quality; Maintain documentation and training materials; Partner with IT on API integrations; Train TA team on new capabilities |
| Required Skills | Problem decomposition, API integration concepts, prompt engineering, data analysis, change management |
| Compensation Impact | 15-25% premium over traditional TA Operations roles |

Time Allocation Transformation

The shift in how TA professionals spend their time represents the most significant operational change:

Current State vs. Projected State (24 months)

| Activity | Traditional TA | AI-Augmented TA | Change |
|---|---|---|---|
| Administrative tasks (data entry, reporting, scheduling) | 70% | 25% | -64% |
| Candidate engagement (conversations, relationship building) | 20% | 55% | +175% |
| Strategic work (workforce planning, advisory) | 10% | 20% | +100% |

This reallocation has implications for candidate experience, hiring manager satisfaction, and the strategic influence of TA within organizations. The paradox is that increased automation produces more human experiences because TA professionals have capacity to invest in high-touch interactions at moments that matter.

Quantified Candidate Experience Improvements

Teams implementing custom AI tools report measurable improvements across candidate experience metrics:

Candidate Experience Improvements

| Metric | Typical Improvement |
|---|---|
| Time-to-first-response | 4.2 days → 0.8 days (81% reduction) |
| Rejection email personalization scores | +42% |
| Overall candidate experience NPS | +18 points |
| Candidate conversion rate (offer to acceptance) | +8% |

Required Skillset Evolution

Table Stakes by 2027:

- Basic prompt engineering (comparable to Excel proficiency today)

- Custom Gem/GPT creation and management

- API integration concepts (understanding what's possible, if not implementation)

- Data interpretation and validation

- AI output quality assessment and bias detection

Differentiating Skills:

- Custom tool development and deployment

- Multi-system integration design

- AI ethics and bias mitigation frameworks

- Change management for AI adoption

Organizations should begin upskilling programs now. The lag between starting skill development and achieving proficiency is 6-12 months. Waiting until these skills are required creates competitive disadvantage.

Governance and Risk Management: Critical Implementation Considerations

As TA teams build custom AI solutions, several governance and risk domains require systematic attention.

Data Privacy and Security Framework

Risk: Inadvertent exposure of candidate PII through inappropriate tool usage or implementation.

Data Privacy and Security - Mitigation Framework

| Step | Action | Frequency |
|---|---|---|
| 1. Data Classification | Define sensitivity levels (public, internal, confidential, PII) | One-time, update annually |
| 2. Tool Mapping | Map each tool to appropriate data sensitivity level | Per tool implementation |
| 3. Policy Establishment | PII never in public AI tools; use enterprise versions for confidential data | One-time, update as needed |
| 4. Usage Audits | Review actual tool usage against policies | Quarterly |
| 5. Pre-Deployment Review | Data handling assessment for all new tools | Per tool before deployment |
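Steps 1-3 of the framework amount to a lookup: classify the data, record the most sensitive class each tool is cleared for, and refuse anything above it. The sketch below is hypothetical; the tool names and clearance levels are illustrative, and the assumption that public AI tools may receive internal data should be replaced by your own policy.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    # Ordered so a higher value means more sensitive data (step 1).
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    PII = 3


# Hypothetical tool map (step 2): the most sensitive data each tool may receive.
TOOL_CLEARANCE = {
    "public_chatbot": Sensitivity.INTERNAL,      # PII never goes into public AI tools
    "enterprise_ai": Sensitivity.CONFIDENTIAL,   # enterprise version for confidential data
    "ats_dashboard": Sensitivity.PII,            # access-controlled, IT-reviewed integration
}


def is_allowed(tool: str, data: Sensitivity) -> bool:
    """Pre-deployment or audit check: may this class of data go into this tool?"""
    clearance = TOOL_CLEARANCE.get(tool)
    if clearance is None:
        return False  # unmapped tools are blocked until reviewed
    return data <= clearance


print(is_allowed("public_chatbot", Sensitivity.PII))           # False
print(is_allowed("enterprise_ai", Sensitivity.CONFIDENTIAL))   # True
print(is_allowed("new_unreviewed_tool", Sensitivity.PUBLIC))   # False
```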

Compliance Checkpoint: GDPR, CCPA, and other privacy regulations apply to AI tools built by TA teams just as they apply to vendor platforms. The legal responsibility doesn't change with the implementation approach.

Code Quality and Maintenance Standards

Risk: AI-generated code containing security vulnerabilities, inefficient implementations, or insufficient error handling.

Mitigation Checklist:

- ✓ Designate code reviewers (TA Engineer or technical partner) for all API integrations

- ✓ Implement version control for all custom tools (Git or simple file versioning)

- ✓ Require documentation for every tool: purpose, data touched, usage instructions, known limitations

- ✓ Conduct security review before deploying tools that handle candidate data

- ✓ Establish sunset policy for tools no longer actively maintained
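Two items on the checklist, required documentation and a sunset policy, can be enforced with a small registry audit. In this sketch the 180-day review window and the record fields are illustrative assumptions, not standards from the source.

```python
from dataclasses import dataclass
from datetime import date

REQUIRED_DOCS = ("purpose", "data_touched", "usage", "limitations")


@dataclass
class ToolRecord:
    name: str
    docs: dict[str, str]  # documentation field -> text
    last_reviewed: date


def audit(tools: list[ToolRecord], today: date,
          max_age_days: int = 180) -> dict[str, list[str]]:
    """Return tools missing required documentation and tools due for sunset review."""
    missing_docs = [t.name for t in tools
                    if any(not t.docs.get(k) for k in REQUIRED_DOCS)]
    stale = [t.name for t in tools if (today - t.last_reviewed).days > max_age_days]
    return {"missing_docs": missing_docs, "sunset_candidates": stale}


tools = [
    ToolRecord("rejection-email-prompt",
               {"purpose": "Draft rejection emails", "data_touched": "Interview notes",
                "usage": "Paste notes, review draft", "limitations": "Needs human review"},
               date(2025, 1, 10)),
    ToolRecord("pipeline-dashboard", {"purpose": "Weekly exec metrics"}, date(2024, 6, 1)),
]
print(audit(tools, today=date(2025, 3, 1)))
```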

Key Principle: AI-generated code is not automatically secure or optimal. Human review remains essential.

Bias and Fairness Evaluation

Risk: AI tools perpetuating or amplifying bias in hiring decisions.

Bias and Fairness - Risk Assessment by Tool Type

| Tool Type | Bias Risk Level | Required Actions |
|---|---|---|
| Administrative automation (reporting, scheduling) | Minimal | Standard documentation |
| Content generation (emails, outreach) | Low | Tone and language review |
| Candidate assessment or screening | High | Rigorous bias audit, demographic outcome analysis, ongoing monitoring |

Critical Actions for High-Risk Tools:

- Conduct bias assessment before deployment

- Audit training data and prompt design for potential bias sources

- Compare outcomes across demographic groups (where legally permissible)

- Maintain human decision authority for all consequential hiring decisions

- Document AI's role in decision process for transparency and accountability
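One common way to compare outcomes across groups is the adverse impact ratio: each group's selection rate divided by the highest group's rate, with ratios below 0.8 (the "four-fifths" rule of thumb in the US Uniform Guidelines on Employee Selection Procedures) flagged for review. This technique is not named in the source; the sketch below is a starting point rather than a full bias audit, and the counts are illustrative, not real data.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Selection rate per group; outcomes maps group -> (selected, total)."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def adverse_impact_flags(outcomes: dict[str, tuple[int, int]],
                         threshold: float = 0.8) -> dict[str, float]:
    """Groups whose selection rate falls below threshold x the highest group's rate.

    0.8 is the "four-fifths" rule of thumb; it flags results for review,
    it does not settle whether a tool is fair.
    """
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}
    return {g: round(r / best, 2) for g, r in rates.items() if r / best < threshold}


# Illustrative counts only: (selected, total screened) per group.
outcomes = {"group_a": (30, 100), "group_b": (18, 100)}
print(adverse_impact_flags(outcomes))  # {'group_b': 0.6}
```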

Conclusion: The Strategic Imperative for TA Leaders

The evidence is clear: talent acquisition teams that build custom AI solutions achieve significant competitive advantages in efficiency, candidate experience, and strategic impact. The time-to-value is measured in weeks, the ROI is demonstrably positive, and the technical barriers are lower than commonly perceived.

The strategic question for TA leaders is not whether this transformation will occur, but whether they will lead it or be disrupted by it. The window of opportunity exists because the technology has matured while adoption remains limited. Organizations acting now will establish capability advantages that compound over time.

The Implementation Path:

- This Week: Identify one high-impact problem (3+ hours weekly of manual work)

- This Month: Allocate 2-4 hours to build a minimum viable solution

- Next Quarter: Measure and document time savings for 4 weeks, share results with stakeholders

- Ongoing: Expand to next problem based on demonstrated ROI, build team capabilities systematically

The barriers are real but manageable. Thousands of TA professionals with non-technical backgrounds are successfully building custom AI tools. The question is not capability—it's commitment.

For organizations seeking structured support, the TA AI Community launching in Sweden provides frameworks, peer learning, and implementation guidance for TA teams building custom AI capabilities. Whether taking first steps with direct prompting or implementing sophisticated multi-system integrations, the community provides resources matched to current capability level.

The future of talent acquisition will be shaped by professionals who build custom solutions for their specific contexts, not by those waiting for universal platforms to solve unique problems. The tools are accessible, the framework is proven, and the opportunity is immediate.

The only remaining variable is your decision to begin.

Put the Builder's Framework into Practice with Castora

Building custom AI tools is powerful, but you don't have to start from scratch. Castora is purpose-built to solve three of the highest-impact challenges covered in this framework:

- Discovery: Transform vague job requirements into a precise Skills Compass in 10 minutes—no more guessing what you really need

- Design: Generate bias-checked interview kits with scoring rubrics in 15 minutes instead of 4-6 hours

- Reveal: Analyze candidate transcripts to find hidden gems with high learning agility that traditional hiring overlooks

Whether you're building your first custom AI tool or looking for a research-backed platform that embodies the builder mindset, Castora gives you immediate value without the implementation overhead.

Request Access to the Closed Beta

- ✅ Create your first Skills Compass in 10 minutes

- ✅ Generate bias-checked interview kits instantly

- ✅ Analyze candidates for future-proof capabilities

- ✅ Free access during beta period


Note: This article includes data from real implementations and case studies. All metrics and timelines are based on actual projects and measured outcomes.